Hopping Back Online

After migrating the hosting solution from the previous Azure Static Web App, the blog is online again, this time served via a bunny.net CDN pulling from object storage.

For several reasons[1][2], I was looking for a new hosting solution for this personal blog. I looked into some options, but not many providers met all of my requirements:

- preferably based in Europe

- custom domain with SSL support via Let’s Encrypt (i.e. at low cost, not charging 20€/month for a certificate)

- consumption-based pay-as-you-go model with no/low minimum fee

- CLI-based upload and sync of the static site generator’s output, e.g. with an S3-compatible tool like `aws`.

For some reason, few providers tick all the boxes (in fact, I believe none does). I made an attempt with Gcore but was ultimately not successful because, unlike Amazon S3 for example, their object storage does not provide a “serve as static site” feature. This meant I had to use the nginx rewrite function to make their CDN serve `index.html` whenever the URL was a directory with a trailing slash. For the bare domain itself, however, I could not get this to work[3]. Gcore would probably have been the cheapest option, with no minimum fee and an actual usage-based cost of less than 1€/month. In fact, the hosting costs for the first week of trying this out were just 0.06€.

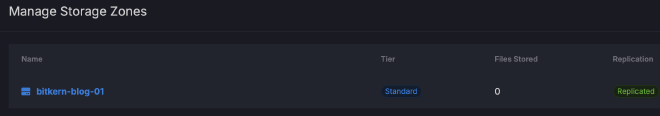

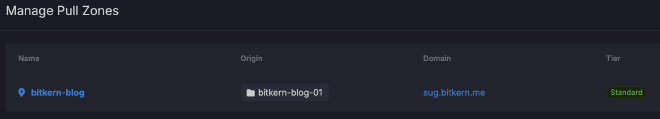

Remaining options included Scaleway and bunny.net. Bunny’s object storage is not S3-compatible, so `aws` and, consequently, `hugo deploy` do not work, but SFTP via `rclone` is a viable option[4]. The blog hosting now consists of a storage zone for the actual static site content and a pull zone for replication in the CDN.

All that remains is to be creative, write some stuff, and run

```shell
rclone sync public bitkern-bunny: -vv -P --inplace --checksum
```
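The build and upload steps could be wrapped in a small helper. The following is a minimal sketch, not the script actually used for this blog: the function names `sync_cmd` and `deploy` and the `DRY_RUN`, `PUBLIC_DIR` and `REMOTE` variables are made up for illustration, and only the remote name and the `rclone` flags are taken from the command above. As far as the flags go, `--checksum` compares files by checksum and size instead of modification time and size, `--inplace` writes directly to the destination file instead of uploading to a temporary name and renaming, `-P` shows progress, and `-vv` enables debug output.

```shell
#!/bin/sh
# Hypothetical deploy helper. Only the rclone flags and the remote name
# "bitkern-bunny:" come from the post; everything else is an assumption.

PUBLIC_DIR="${PUBLIC_DIR:-public}"    # Hugo's default output folder
REMOTE="${REMOTE:-bitkern-bunny:}"    # rclone remote for the storage zone

# Print the sync command so it can be inspected before running it.
sync_cmd() {
    printf 'rclone sync %s %s -vv -P --inplace --checksum\n' \
        "$PUBLIC_DIR" "$REMOTE"
}

# Build the site, then upload it.
# With DRY_RUN=1 the upload command is only printed and nothing is built.
deploy() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        sync_cmd
        return 0
    fi
    hugo || return 1
    rclone sync "$PUBLIC_DIR" "$REMOTE" -vv -P --inplace --checksum
}
```

After sourcing the file, `DRY_RUN=1 deploy` prints the command without touching anything, and a plain `deploy` builds and uploads.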

[1] I always had an item on my roadmap to include more photos in this blog, maybe even galleries, if I could find a good hugo theme or partial for that purpose. This might quickly eat into the storage of any free tier of the more comfortable out-of-the-box hosting solutions. Relocating the data-intensive stuff into blob or object storage was an obvious idea, as it is a much better way to provide some more MB of data at low cost. And since the blog is static only, why not put the entire blog itself into the storage that serves as the website origin?

[2] My Azure account was ~~fucked~~ messed up. I am not sure if Microsoft was responsible for the blunder or if I overlooked a notification email. They are in the process of deprecating classic administrators and migrating users to RBAC. My issue was that the sole user in my directory, which Microsoft created when I signed up for Azure, was not eligible for the Global Administrator role despite being Account Admin and Classic Service Administrator. Ultimately, all proposed ways to migrate to RBAC failed due to insufficient privileges. Since there were no critical resources at stake (after all, this is just a personal blog), I decided to close shop instead of following through with a nerve-racking support case.

[3] In Amazon S3, you can enable a feature to host storage as a static site, and additionally, on the CloudFront CDN there is an option to define a default root document. This latter feature is what I could not get done for Gcore.

[4] The actual deployment on bunny.net was quick and easy. Configuring a storage zone, a pull zone, SSL, and a CNAME was straightforward. However, unexpectedly, getting `rclone` to work took me longer than the initial setup. I had never used the tool before, and there were some pitfalls, such as your all-knowing AI tool suggesting WebDAV as the protocol, which failed. The default method of uploading to a partial file and then renaming it failed as well. In the end, SFTP with the `--inplace` option did the trick. Additionally, the default method `rclone` uses to detect changes to be synced, based on file size and modification time, failed: after each compile with hugo, the complete public folder would be transferred again. Enforcing a validation based on checksum was the solution.
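Why a content-based comparison avoids the full re-upload can be shown locally without `rclone`. The sketch below simulates two builds that write the same page into hypothetical `build1` and `build2` folders; the files get fresh modification times, yet their checksums match. POSIX `cksum` is only a stand-in here. Which hash `rclone` actually compares depends on what the remote supports, so this shows the principle rather than its implementation.

```shell
#!/bin/sh
# Illustration only: two "builds" write identical content at different times.
workdir=$(mktemp -d)
mkdir "$workdir/build1" "$workdir/build2"

printf '<h1>Hello</h1>\n' > "$workdir/build1/index.html"
printf '<h1>Hello</h1>\n' > "$workdir/build2/index.html"

# Checksum of the content only; the modification time plays no role.
sum1=$(cksum < "$workdir/build1/index.html")
sum2=$(cksum < "$workdir/build2/index.html")

if [ "$sum1" = "$sum2" ]; then
    result="unchanged, skip upload"
else
    result="changed, upload"
fi
echo "$result"

rm -rf "$workdir"
```

A comparison based on modification time would treat the second file as newer and transfer it again, which matches the behaviour described above.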